AI Engineering in the real world

👋 Hi, this is Gergely with a subscriber-only issue of the Pragmatic Engineer Newsletter. In every issue, I cover challenges at Big Tech and startups through the lens of engineering managers and senior engineers.

What does AI engineering look like in practice? Hands-on examples and learnings from software engineers turned “AI engineers” at seven companies

AI Engineering is the hottest new engineering field, and it’s also an increasingly loaded phrase which can mean a host of different things to different people. It can refer to ML engineers building AI models, or data scientists and analysts working with large language models (LLMs), or software engineers building on top of LLMs, etc. To make things more confusing, some AI tooling vendors use the term for their products, like Cognition AI naming theirs an “AI engineer.”

In her new book “AI Engineering,” author Chip Huyen defines AI engineering as building applications that use LLMs, placing it between software engineering (building software products using languages, frameworks, and APIs), and ML engineering (building models for applications to use).

Overall, AI engineering feels closer to software engineering because it usually starts with software engineers building AI applications using LLM APIs. The more complex the AI engineering use case, the more it can morph into looking like ML engineering.

Today, we focus on software engineers who have switched to being AI engineers. These are devs who work with LLMs, and build features and products on top of them. We cover:

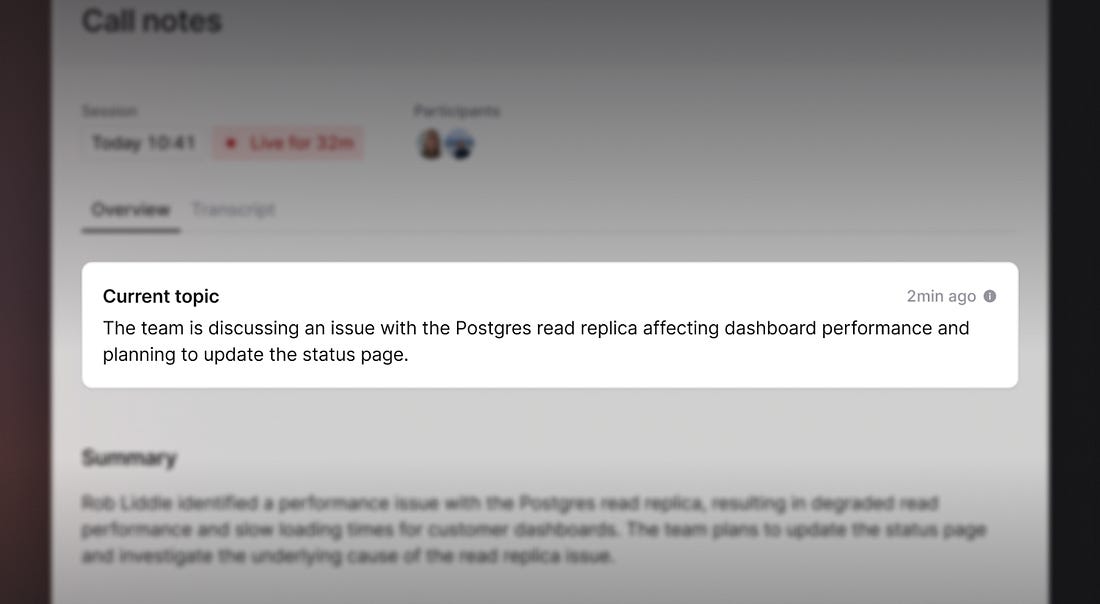

Note: I am an investor in incident.io and Wordsmith, which both share details in this deepdive. I always focus on being unbiased in these articles – but at the same time, being an investor means these companies share otherwise hard-to-obtain details. I was not paid by any business to mention them in this article. See my ethics statement for more.

Thanks to the engineers contributing to this deepdive: Lawrence Jones (senior staff engineer at incident.io), Tillman Elser (AI lead at Sentry), Ross McNairn (cofounder at Wordsmith AI), Igor Ostrovsky (cofounder at Augment Code), Matt Morgis (Staff Engineer, AI, formerly at Elsevier), Ashley Peacock (Staff Engineer at Simply Business), and Ryan Cogswell (Application Architect at DSI).

1. What are companies building?

Incident.io: incident note taker and bot

Incident.io is a tool to help resolve outages as they happen. A few months ago, the engineering team went back to the drawing board to build features that capitalize on how much LLMs have improved to date. Two of these features:

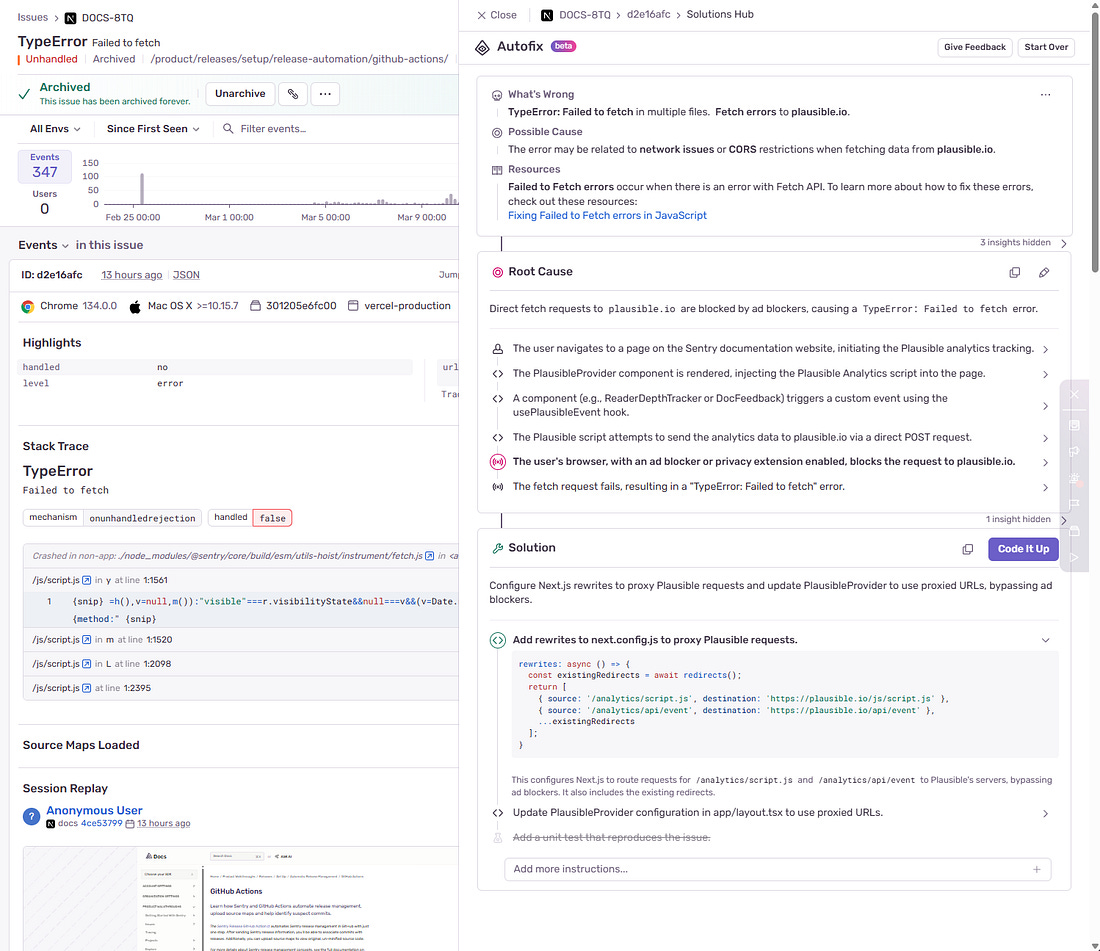

Both these features make heavy use of LLMs, and also integrate with several other systems like backend services, Slack, etc.

Sentry: Autofix and issue grouping

Sentry is a popular application monitoring tool. Two interesting projects they built:

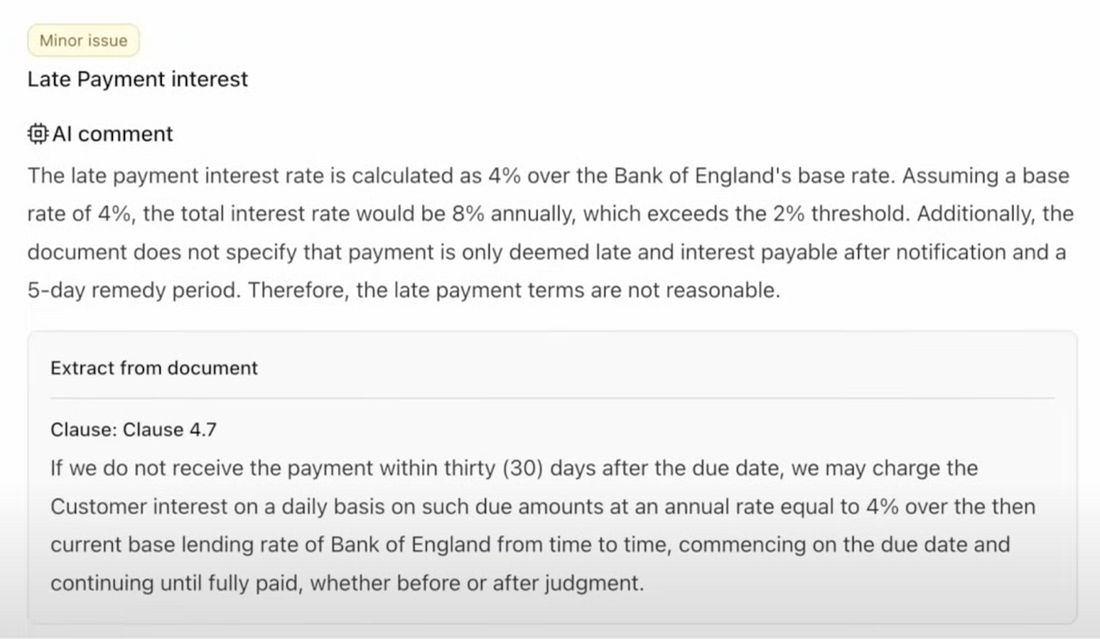

Both these features are fair source, meaning you can see exactly how they work.

Wordsmith: legal AI

Wordsmith is building AI tools that legal teams use, including:

Augment Code

Augment Code is an AI coding assistant for engineering teams and large codebases. This kind of product probably needs little introduction for devs:

Elsevier: RAG platform

Elsevier is one of the world’s leading publishers of scientific and medical content. Matt Morgis was an engineering manager at the company when the engineering leadership noticed that several product teams were independently implementing RAG capabilities, with each team sourcing content, parsing it, chunking it, and creating embeddings. Matt and his team built an enterprise-wide RAG platform as the solution, enabling multiple teams to build AI-powered products for medical and scientific professionals. Their platform consists of:

Products built on top of this platform:

Insurance company: chatbot

Ashley Peacock is a staff software engineer at the insurer, Simply Business, who built a pretty common use case: a chatbot for customers to get answers to questions. This seems like the simplest of use cases – you might assume it just involves connecting documentation for the chatbot to use – but it was surprisingly challenging because:

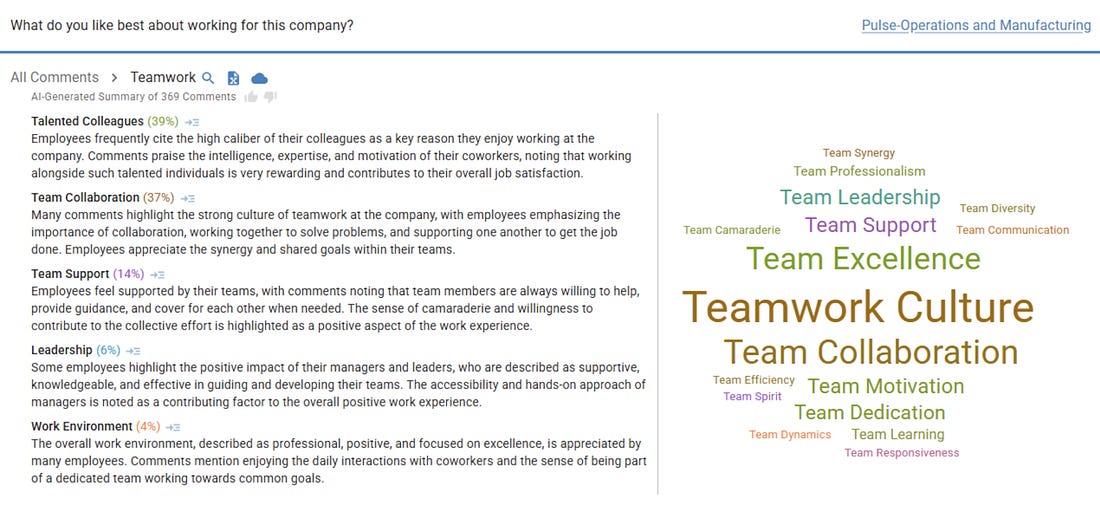

The team had the idea to create an “approved answers” knowledge base for the chatbot, and faced the challenge of creating the questions for this. The team made the chatbot state when it cannot answer a question and then hand over to human support, who then update the knowledge base with their solution. This approach works pretty well after taking a few iterations to get right.

HR SaaS: summarization features

Data Solutions International (DSI) is a 30-person HR tech company with 5 engineers, selling products that help with performance review processes, assessments, and employee engagement surveys. The company is family-owned, has been operating for 27 years, and is profitable.

Summarizing comments for employee engagement processes was the first feature they wanted to build, as something customers would appreciate and from which the team could learn about working with LLMs.

During an employee engagement process, there are questions like "what do you like most about working here", and "if you could change one thing about working at Company X, what would it be?", etc. For larger companies with thousands of employees, there may be thousands of comments per question. Individual departmental leads might read all comments relevant to them, but there’s no way an HR team at a very large business could check every single comment.

Before LLMs, such comments were sorted into predefined categories, which were hardcoded per company and per survey. This was okay, but not great.

Data Solutions International’s goal was to use LLMs to summarize a large number of comments, report to survey admins the broad categories the comments belong in and how many comments fall into each category, and allow drilling down into the data.

2. Onboarding to AI Engineering as a software engineer

So, how do you get started building applications with LLMs as a software engineer transitioning to this new field?
Some advice from folks at the above companies who have:

You can teach yourself AI – and probably should

Let’s start with an encouraging story from veteran software engineer Ryan Cogswell, at HR tech company, DSI. He joined the company 25 years ago, and was the first engineering hire. When AI tools came along, DSI decided to build a relatively simple first AI feature in their HR system that summarized comments for employee engagement purposes.

Neither Ryan nor any of the other 4 devs had expertise in AI and LLMs, so the company contracted an external agency which made a fixed, timeboxed offer to scope the project. Here’s how it went:

The proposal was for a really complex architecture:

The agency quoted 6-9 months to build the relatively small feature (!!), and an estimated operational cost higher than DSI’s entire investment in all their infrastructure!

This was when Ryan asked how hard it could be to build it themselves, and got to work reading and prototyping, making himself the company’s resident GenAI expert. In 2 months, Ryan and a couple of colleagues built the feature, and it costs a fraction of the agency’s quoted cost to operate. His tech stack choice:

Get used to non-deterministic outputs

Ross McNairn is cofounder and CEO of the startup, Wordsmith, which several software engineers have joined. You need to rethink how you approach problems, he says:

Switching to in-house can be easier, even for EMs

Matt Morgis was an engineering manager at Elsevier who decided to transition back to staff engineer, specifically to work on AI:

The transition was successful, and today Matt is a staff engineer focusing on GenAI at CVS Health.

3. Tech stack

Here’s the tech stack which various companies used. There’s no right or wrong tech stack – what follows is for context and inspiration, not a blueprint.

Incident.io

The stack:

Sentry

In-house LLM agent tooling: the team evaluated and rejected using a tool like the LangChain framework to integrate LLMs with other data sources and into workflows. It was a lot more work to build their own, but the upside is that the architecture and code are more in line with the abstractions and design patterns in Sentry’s existing codebase. The company used the following languages and frameworks to build this tooling:

Legal AI startup Wordsmith

The stack:

Multi-cloud providers:

Azure and GCP each have business models for locking in customers; Microsoft is the only major cloud provider offering OpenAI models, and only GCP offers Gemini. The company routes to different models by use case:
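
Routing requests to different models by use case can be sketched as a small lookup table with a fallback. This is a minimal illustration only: the use-case names, provider labels, and model names below are hypothetical placeholders, not Wordsmith's actual configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelRoute:
    """Which provider and model should serve a request."""
    provider: str
    model: str


# Hypothetical routing table: every key and value here is a placeholder
# chosen for illustration, not taken from any company's real setup.
ROUTES = {
    "long_document_review": ModelRoute("provider-a", "large-context-model"),
    "quick_chat_reply": ModelRoute("provider-b", "small-fast-model"),
}

# Used when a use case has no dedicated entry in the table.
DEFAULT_ROUTE = ModelRoute("provider-a", "general-model")


def route_for(use_case: str) -> ModelRoute:
    """Return the route for a use case, falling back to the default."""
    return ROUTES.get(use_case, DEFAULT_ROUTE)


if __name__ == "__main__":
    for name in ("long_document_review", "quick_chat_reply", "something_else"):
        route = route_for(name)
        print(f"{name}: {route.provider} / {route.model}")
```

Keeping the mapping in one place means that swapping a model for a given use case is a change to the table, not to the calling code.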

AI coding assistant, Augment Code

The stack to build and run LLMs:

RAG platform at scientific publisher Elsevier

The scientific publisher used this stack to build their in-house RAG platform:

Chatbot for insurance company Simply Business

A pretty simple stack:

Summarization at HR tech DSI

As covered above, Ryan took the initiative at DSI by building a simpler solution than the one an AI vendor proposed. DSI ended up with:

Tech stack trends across companies

The seven businesses in this article are all different, but there are some common trends:

4. Engineering challenges

What unusual or new challenges does AI Engineering pose for more “traditional” software engineers? The most common ones mentioned:...
