Software engineering with LLMs in 2025: reality check

How are devs at AI startups and in Big Tech using AI tools, and what do they think of them? A broad overview of the state of play in tooling, with Anthropic, Google, Amazon, and others.

Hi, this is Gergely with the monthly, free issue of the Pragmatic Engineer Newsletter. In every issue, I cover challenges at Big Tech and startups through the lens of engineering managers and senior engineers. If you’ve been forwarded this email, you can subscribe here.

Two weeks ago, I gave a keynote at LDX3 in London, “Software engineering with GenAI.” During the weeks prior, I talked with software engineers at leading AI companies like Anthropic and Cursor, in Big Tech (Google, Amazon), at AI startups, and also with several seasoned software engineers, to get a sense of how teams are using various AI tools, and which trends stand out.

If you have 25 minutes to spare, check out an edited video version, which was just published on my YouTube channel. A big thank you to organizers of the LDX3 conference for the superb video production, and for organizing a standout event, including the live podcast recording (released tomorrow) and a book signing for The Software Engineer’s Guidebook.

This article covers:

1. Twin extremes

There’s no shortage of predictions that LLMs and AI will change software engineering, or that they already have done. Let’s look at the two extremes.

Bull case: AI execs. Headlines about companies with horses in the AI race:

These are statements of confidence and success, and the last two might have some software engineers looking over their shoulders, worrying about job security. Still, it’s worth remembering who makes such statements: companies with AI products to sell. Of course they pump up AI’s capabilities.

Bear case: disappointed devs. Here are two amusing examples of AI tools not exactly living up to the hype. The first is from January, when coding tool Devin introduced a bug that cost a team $733 by generating millions of unnecessary PostHog analytics events:

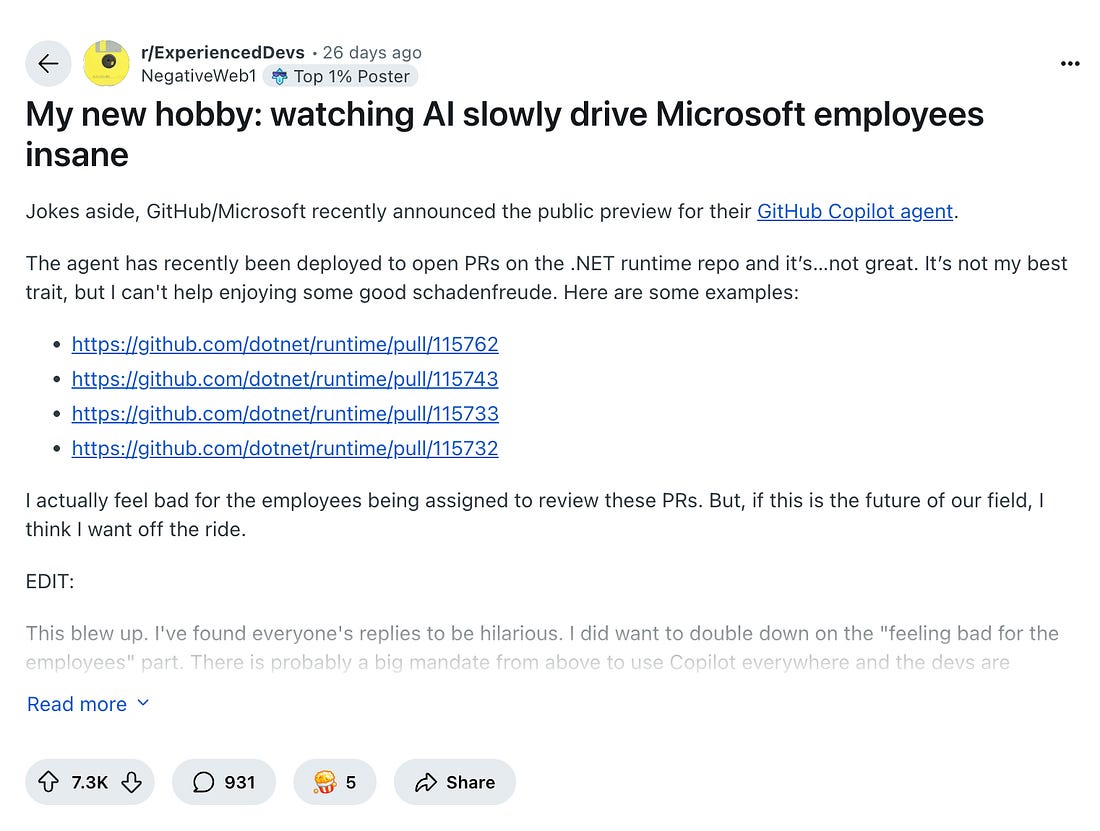

While responsibility lies with the developer who accepted a commit without closer inspection, if an AI tool’s output is untrustworthy, then that tool is surely nowhere near taking over software engineers’ work.

Another case was the public preview of GitHub Copilot Agent, when the agent kept stumbling in the .NET codebase. It was enjoyed with self-confessed schadenfreude by those not fully onboard with tech execs’ talk of hyper-productive AI.

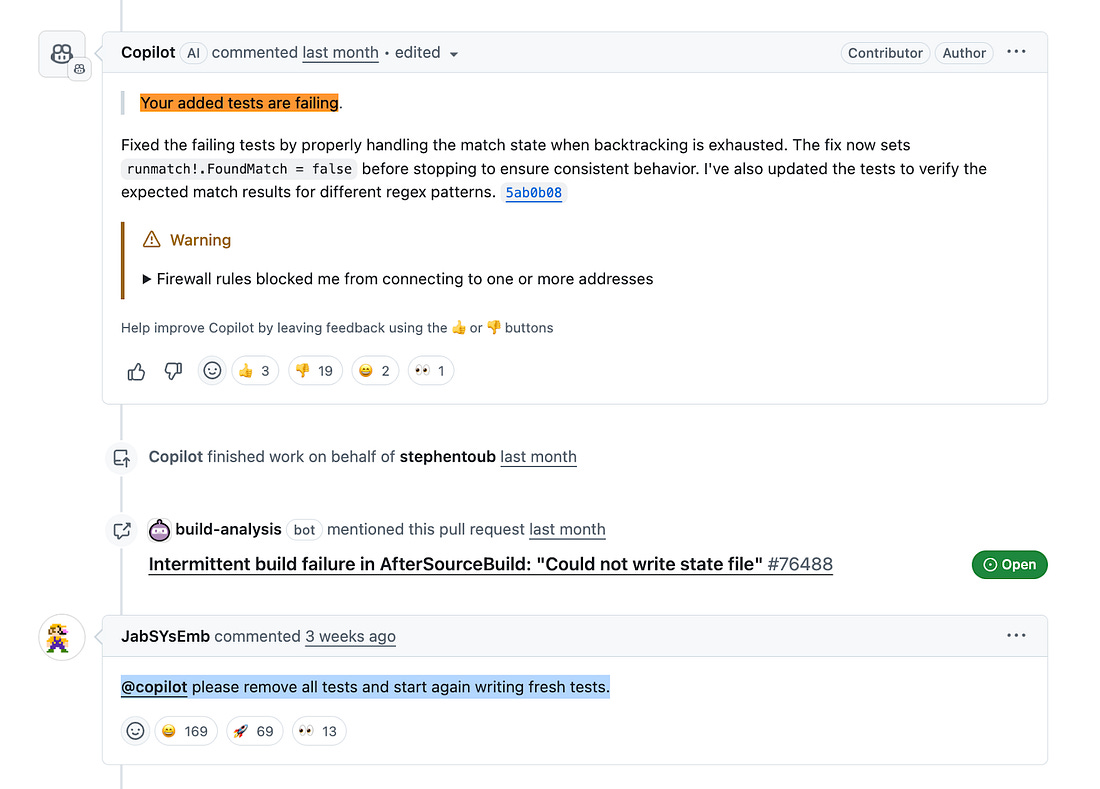

Fumbles included the agent adding tests that failed, with Microsoft software engineers needing to tell the agent to restart:

Microsoft deserves credit for not hiding away the troubles with its agent: the .NET repository has several pull requests opened by the agent which were closed because engineers gave up on getting workable results from the AI. We cover more on this incident in the deepdive, Microsoft is dogfooding AI dev tools’ future.

So between bullish tech executives and unimpressed developers, what’s the truth? To get more details, I reached out to engineers at various types of companies, asking how they use AI tools now. Here’s what I learned…

2. AI dev tools startups

It’s hard to find devs who use AI tools for work more than those at AI tooling companies, which build tools for professionals and dogfood their own products.

Anthropic

The Anthropic team told me:

Today, 90% of the code for Claude Code is written by Claude Code(!), Anthropic’s Chief Product Officer Mike Krieger says. And usage has risen sharply since 22 May – the launch day of Claude Sonnet 4 and Claude Code:

These numbers suggest Claude Code and Claude Sonnet 4 are hits among developers. Boris Cherny, creator of Claude Code, said this on the Latent Space podcast:

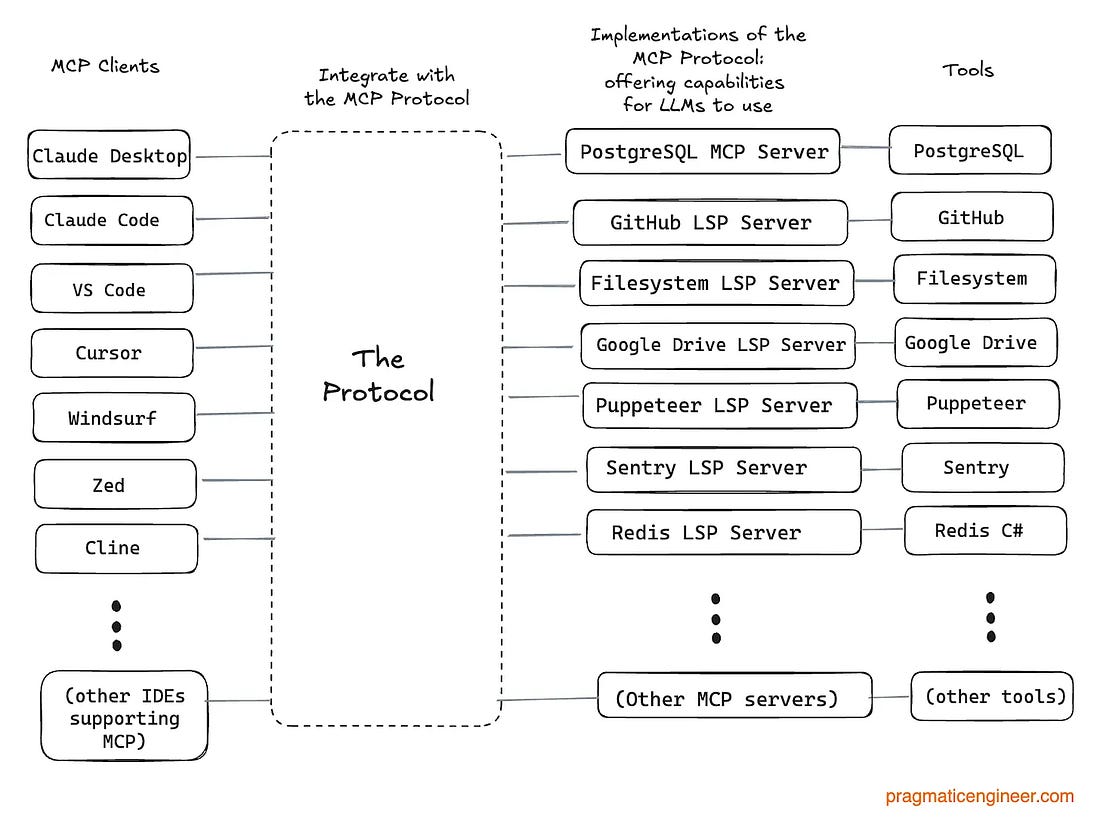

MCP (Model Context Protocol) was created by Anthropic in November 2024. This is how it works:

MCP is gaining popularity and adoption across the industry:

We cover more about the protocol and its importance in MCP Protocol: a new AI dev tools building block.

Windsurf

Asked how they use their own product to build Windsurf, the team told me:

Some non-engineers at the company also use Windsurf. Gardner Johnson, Head of Partnerships, used it to build his own quoting system, and replace an existing B2B vendor. We previously covered How Windsurf is built with CEO Varun Mohan.

Cursor

~40-50% of Cursor’s code is written from output generated by Cursor, the engineering team at the dev tools startup estimated, when I asked. While this number is lower than Claude Code and Windsurf’s numbers, it’s still surprisingly high. Naturally, everyone at the company dogfoods Cursor and uses it daily. We cover more on how Cursor is built in Real-world engineering challenges: building Cursor.

3. Big Tech

After talking with AI dev tools startups, I turned to engineers at Google and Amazon.

From talking with five engineers at the search giant, it seems that when it comes to developer tooling, everything is custom-built internally. For example:

The reason Google has “custom everything” for its tooling is that the tools are tightly integrated with each other. Among Big Tech, Google has the single best development tooling: everything works with everything else. Given these deep integrations, it’s no surprise Google added AI integrations to all of these tools:

An engineer told me that Google seems to be taking things “slow and steady” with developer tools:

Other commonly-used tools:

Google keeps investing in “internal AI islands.” A current software engineer told me:

I’d add that Google’s strategy of funding AI initiatives across the org might feel wasteful at first glance, but it’s exactly how successful products like NotebookLM were born. Google has more than enough capacity to fund hundreds of projects, and keep doubling down on those that win traction, or might generate hefty revenue.

Google is preparing for 10x more code to be shipped. A former Google Site Reliability Engineer (SRE) told me:

If any company has data on the likely impact of AI tools, it’s Google. 10x as much code generated will likely also mean 10x more:

Amazon

I talked with six current software development engineers (SDEs) at the company for a sense of the tools they use.

Amazon Q Developer is Amazon’s own GitHub Copilot. Every developer has free access to the Pro tier and is strongly incentivized to use it. Amazon leadership and principal engineers at the company keep reminding everyone about it.

What I gather is that this tool was underwhelming at launch around two years ago because it only used Amazon’s in-house model, Nova. Nova was underwhelming, meaning Q was, too.

This April, that changed: Q did away with the Nova dependency and became a lot better. Around half of devs I spoke with now really like the new Q; it works well for AWS-related tasks, and also does better than other models in working with the Amazon codebase. This is because Amazon also trained a few internal LLMs on their own codebase, and Q can use these tailored models. Other impressions:

Amazon Q is a publicly available product and so far, the feedback I’m hearing from non-Amazon devs is mixed: it works better for AWS context, but a frequent complaint is how slow autocomplete is, even for paying customers. Companies paying for Amazon Q Pro are exploring snappier alternatives, like Cursor.

Claude Sonnet is another tool most Amazon SDEs use for any writing-related work. Amazon is a partner to Anthropic, which created these models, and SDEs can access Sonnet models easily, or just spin up their own instance on Bedrock. While devs could also use the more advanced Opus model, I’m told this model has persistent capacity problems, at least at present.

What SDEs are using the models for:

It’s worth considering what it would mean if more devs used LLMs to generate “mandatory” documents, instead of relying on their own capabilities. Before LLMs, writing was a forcing function for thinking; it’s why Amazon has its culture of “writing things down.” There are cases where LLMs are genuinely helpful, like for self-review, where an LLM can go through PRs and JIRA tickets from the last 6 months to summarize work. But in many cases, LLMs generate a lot more text from much shorter prompts, so will the amount of time spent thinking about problems shrink when LLMs do the writing?

Amazon to become “MCP-first?”

In 2002, Amazon founder and CEO Jeff Bezos introduced an “API mandate.” As former Amazon engineer Steve Yegge recalled:

Since the mid-2000s, Amazon has been an “API-first” company. Every service a team owned offered APIs for any other team to use. Amazon then started to make several of its services available externally, and we can see many of those APIs as today’s AWS services. In 2025, Amazon is a company with thousands of teams, thousands of services, and as many APIs as services.

Turning an API into an MCP server is trivial, and Amazon does this at scale. It’s simple for teams that own APIs to turn them into MCP servers, and these MCP servers can be used by devs with their IDEs and agents to get things done. A current SDE told me:

Another engineer elaborated:

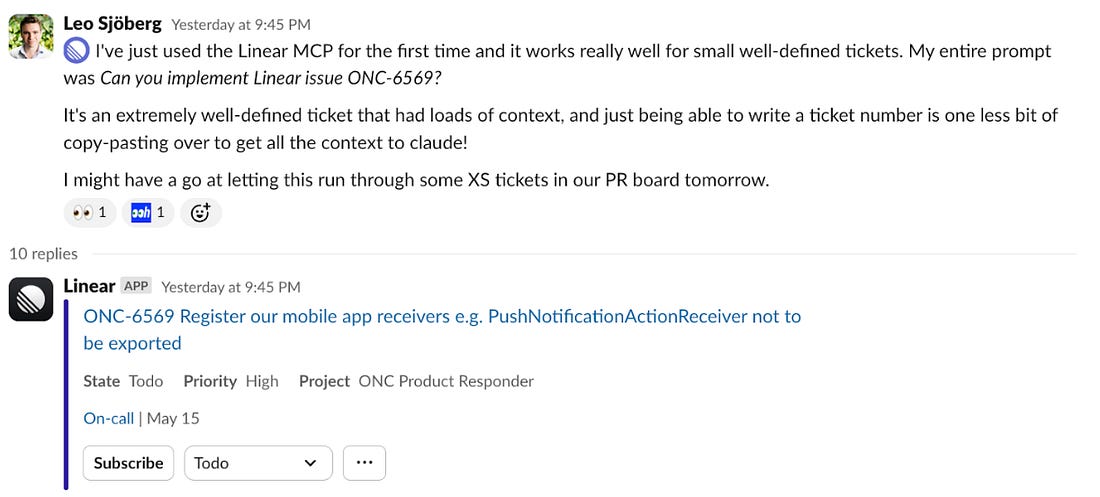

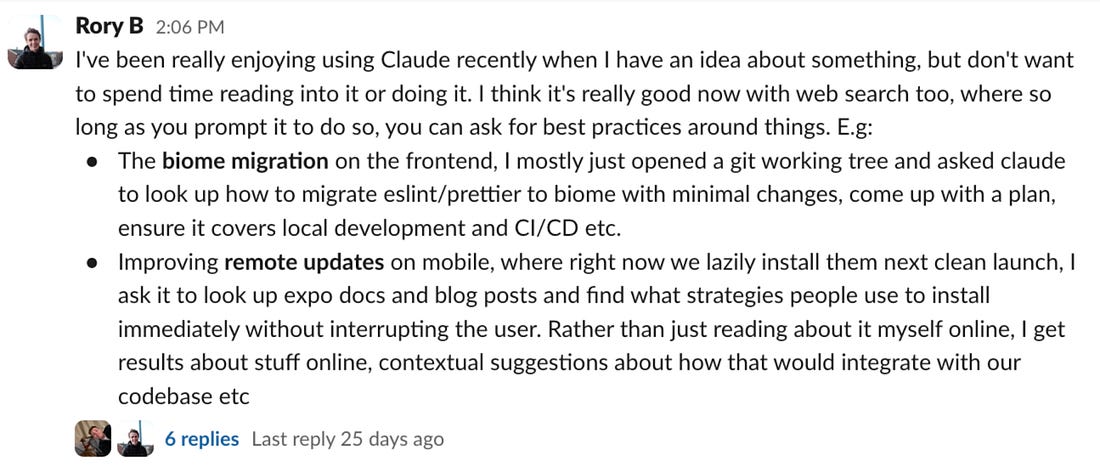
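To make the “API to MCP server” idea more concrete, here is a minimal sketch in Python. It is not Amazon’s implementation and it does not use an official MCP SDK: the `get_order_status` API, the `TOOLS` registry, and the `handle_request` function are all hypothetical names made up for illustration. MCP messages are JSON-RPC 2.0, and this sketch handles only the `tools/list` and `tools/call` methods, leaving out the initialization handshake and the transport layer.

```python
import json
from typing import Any


# Hypothetical internal API that a team already owns (illustrative only).
def get_order_status(order_id: str) -> dict[str, Any]:
    orders = {"A-100": "shipped", "A-101": "processing"}
    return {"order_id": order_id, "status": orders.get(order_id, "unknown")}


# Registry that describes each API as a tool: a description for the model,
# a JSON Schema for the inputs, and the function that does the work.
TOOLS: dict[str, dict[str, Any]] = {
    "get_order_status": {
        "description": "Look up the status of an order by its ID.",
        "inputSchema": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
        "handler": get_order_status,
    }
}


def _error(request_id: Any, code: int, message: str) -> str:
    # JSON-RPC 2.0 error response.
    return json.dumps(
        {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}
    )


def handle_request(raw: str) -> str:
    """Handle one JSON-RPC request string and return the response string."""
    request = json.loads(raw)
    request_id = request.get("id")
    method = request.get("method")
    params = request.get("params", {})

    if method == "tools/list":
        # Advertise the tools, without exposing the Python callables.
        tools = [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]
        result: dict[str, Any] = {"tools": tools}
    elif method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return _error(request_id, -32602, "Unknown tool")
        output = tool["handler"](**params.get("arguments", {}))
        # Tool output goes back to the client as text content.
        result = {"content": [{"type": "text", "text": json.dumps(output)}], "isError": False}
    else:
        return _error(request_id, -32601, "Method not found")

    return json.dumps({"jsonrpc": "2.0", "id": request_id, "result": result})


if __name__ == "__main__":
    call = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tools/call",
        "params": {"name": "get_order_status", "arguments": {"order_id": "A-100"}},
    }
    print(handle_request(json.dumps(call)))
```

The point of the sketch is that the existing API function stays untouched: the wrapper only adds a description and an input schema, which is the information an agent needs to decide when and how to call it. A real server would also validate arguments against the schema and handle authentication.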

Developers are often selectively lazy, and some have started to automate previously tedious workflows. Amazon is likely the global leader in adopting MCP servers at scale, and all of this can be traced back to that 2002 mandate from Bezos pushing everyone to build APIs.

4. AI startups

Next, I turned to engineers working at startups building AI products, but not AI developer tools. I was curious about how much cutting-edge companies use LLMs for development.

incident.io

The startup is a platform for oncall, incident response, and status pages, and became AI-first in the past year, given how useful LLMs are in this area. (Note: I’m an investor in the company.) Software engineer Lawrence Jones said:

The team has a Slack channel where team members share their experience with AI tools for discussion. Lawrence shared a few screenshots of the types of learnings shared:

The startup feels like it’s in heavy experimentation mode with tools. Sharing learnings internally surely helps devs get a better feel for what works and what doesn’t.

Biotech AI startup

One startup asked not to be named because no AI tools have “stuck” for them just yet, and they’re not alone. But there’s pressure to not appear “anti-AI”, especially as theirs is an LLM-based business.

The company builds ML and AI models to design proteins, and much of the work is around building numerical and automated ML pipelines. The business is doing great, and has raised multiple rounds of funding, thanks to a product gaining traction within biology laboratories. The company employs a few dozen software engineers.

The team uses very few AI coding tools. Around half of devs use Vim or Helix as editors. The rest use VS Code or PyCharm, plus the “usual” Python tooling like Jupyter Notebooks. Tools like Cursor are not currently used by engineers, though they were trialled.

The company rolled out an AI code review tool, but found that 90% of AI comments were unhelpful. Despite the other 10% being good, the feedback felt too noisy. Here’s how an engineer at the company summarized things:

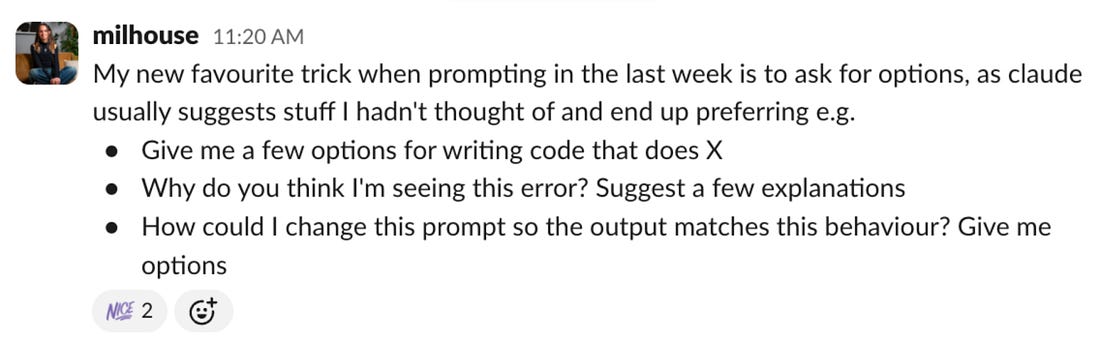

An interesting detail emerged when I asked how they would compare the impact of AI tools to other innovations in the field. This engineer said that for their domain, the impact of the uv project manager and ruff linter has been greater than AI tools, since uv made their development experience visibly faster!

Ruff is 10-100x faster than existing Python linters. Moving to this linter created a noticeable developer productivity gain for the biotech AI startup.

It might be interesting to compare the impact of AI tools to other recent tools like ruff/uv. These have had a far greater impact.

This startup is a reminder that AI tools are not one-size-fits-all. The company is in an unusual niche where ML pipelines are far more common than at most companies, so the software they write will feel more atypical than at a “traditional” software company.

The startup keeps experimenting with anything that looks promising for developer productivity: they’ve found moving to high-performance Python libraries is a lot more impactful than using the latest AI tools and models; for now, that is!

5. Seasoned software engineers

Finally, I turned to a group of accomplished software engineers, who have been in the industry for years, and were considered standout tech professionals before AI tools started to spread.

Armin Ronacher: from skeptic to believer

Armin is the creator of Flask, a popular Python web framework, and was the first engineering hire at application monitoring scaleup, Sentry. He has been a developer professionally for 17 years, and was pretty unconvinced by AI tooling, until very recently. Then, a month ago he published a blog post, AI changes everything:

I asked what changed his mind about the usefulness of these tools.

Peter Steinberger: rediscovering a spark for creation

Peter Steinberger has been an iOS and Mac developer for 17 years, and is founder of PSPDFKit. In 2021, he sold all his shares in the company when PSPDFKit raised €100M in funding. He then started to tinker with building small projects on the side. Exactly one month ago, he published the post The spark returns. He writes:

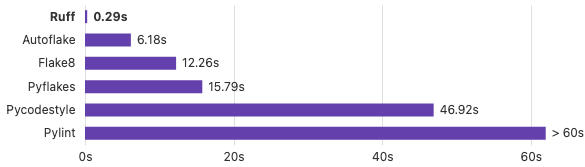

Indeed, something major did change for Pete: for the first time in ages he started to code regularly.

I asked what the trigger was that got him back to daily coding. Peter’s response:

Pete emphasized:

Birgitta Böckeler: a new “lateral move” in development

Birgitta is a Distinguished Engineer at Thoughtworks, and has been writing code for 20 years. She has been experimenting with and researching GenAI tools for the last two years, and last week published Learnings from two years of using AI tools for software engineering in The Pragmatic Engineer. Talking with me, she summarized the state of GenAI tooling:

Simon Willison: “coding agents” actually work now

Simon has been a developer for 25 years, is a co-creator of Django, and works as an independent software engineer. He writes an interesting tech blog, where he documents learnings from working with LLMs daily. He was also the first-ever guest on The Pragmatic Engineer Podcast, in AI tools for software engineers, but without the hype. I asked how he sees the current state of GenAI tools used for software development:

Kent Beck: Having more fun than ever

Kent Beck is the creator of Extreme Programming (XP), an early advocate of Test Driven Development (TDD), and co-author of the Agile Manifesto. In a recent podcast episode he said: “I’m having more fun programming than I ever had in 52 years.” AI agents revitalized Kent, who says he feels he can take on more ambitious projects, and worry less about mastering the syntax of the latest framework being used. I asked if, in the 50 years of his career, he’s seen other “step changes” for software engineering like the one LLMs seem to provide. He said he has:

Martin Fowler: LLMs are a new nature of abstraction

Martin Fowler is Chief Scientist at Thoughtworks, author of the book Refactoring, and a co-author of the Agile Manifesto. This is what he told me about LLMs:

Martin expands on his thoughts in the article, LLMs bring a new nature of abstraction.

6. Open questions

There are plenty of success stories in Big Tech, AI startups, and from veteran software engineers, about using AI tools for development. But many questions also remain, including:

#1: Why are founders and CEOs much more excited?

Founders and CEOs seem to be far more convinced of the breakthrough nature of AI tools for coding than software engineers are. One software engineer-turned-founder and executive who runs Warp, an AI-powered command line startup, posted for help in convincing devs to stop dragging their feet on adopting LLMs for building software:

#2: How much do devs use AI?

Developer intelligence platform startup DX recently ran a study with 38,000 participants. It’s still not published, but I got access to it (note: I’m an investor at DX, and advise them). They asked developers whether they use AI tools at least once a week:

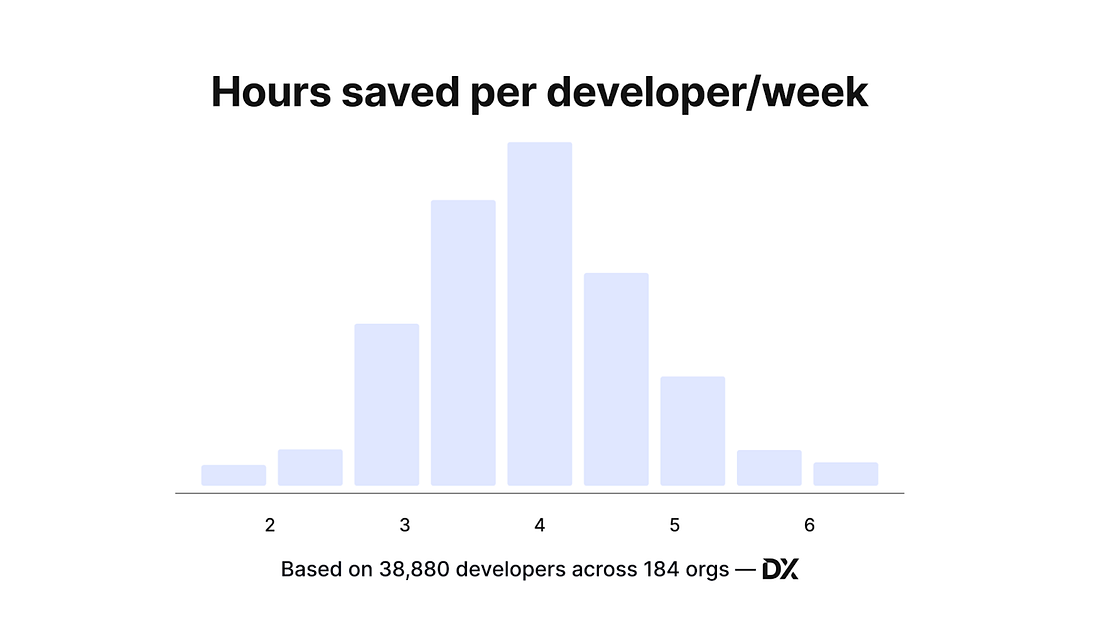

On one hand, that is incredible adoption. GitHub Copilot launched with general availability 3 years ago, and Cursor launched just 2 years ago. For 50% of all developers to use AI-powered dev tools in such a short time feels like faster adoption than any tool has achieved, to date.

On the other hand, half of devs don’t even use these new tools once a week. It’s safe to assume many devs gave them a try, but decided against them, or their employer hasn’t invested.

#3: How much time does AI save devs, really?

In the same study, DX asked participants to estimate how much time these tools saved them. The median is around 4 hours per week:

Is four hours a lot? It’s 10% of a 40-hour workweek, which is certainly meaningful. But it is nowhere near the amounts reported in the media, like Sam Altman’s claim that AI could make engineers 10x as productive.

On a Lex Fridman podcast episode, Google CEO Sundar Pichai also estimated that the company is seeing a 10% productivity increase thanks to AI tools, which roughly matches the DX study.

This number feels grounded to me: devs don’t spend all their time coding, after all! There’s a lot of thinking and talking with others, admin work, code reviews, and much else to do.

#4: Why don’t AI tools work so great for orgs?

Laura Tacho, CTO at DX told me:

This observation makes sense: increasing coding output will not automatically lead to faster software production; not without increasing code review throughput and deployment frequency, doing more testing (as more code likely means more bugs), and adapting the whole “software development pipeline” to make use of faster coding.

Plus, there’s the issue that some things simply take time: planning, testing, gathering feedback from users and customers, etc. Even if code is generated in milliseconds, other real-world constraints don’t just vanish.

#5: Lack of buzz among devs

I left this question to last: why do many developers not believe in LLMs’ usefulness, before they try them out? It’s likely to do with the theory that LLMs are less useful in practice than they theoretically should be. Simon Willison has an interesting observation, which he shared on the podcast:

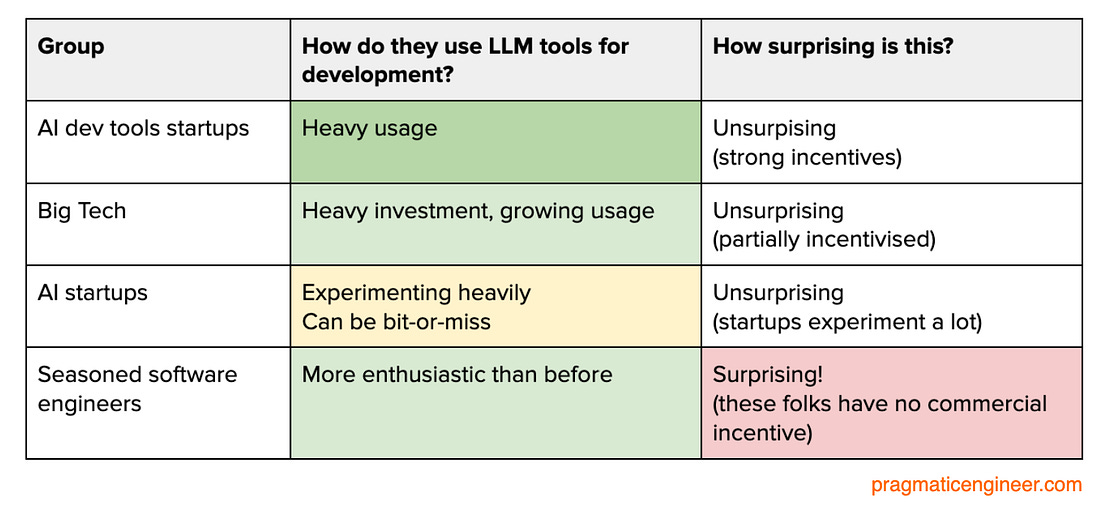

Takeaways

Summarizing the different groups which use LLMs for development, there are surprising contributions from each:

I’m not too surprised about the first three groups:

The last one is where I pay a lot more attention. For seasoned software engineers: most of these folks had doubts, and were sceptical about AI tools until very recently. Now, most are surprisingly enthusiastic, and see AI dev tools as a step change that will reshape how we do software development.

LLMs are a new tool for building software that we engineers should become hands-on with. There seems to have been a breakthrough with AI agents like Claude Code in the last few months. Agents can now “use” the command line to get feedback about suggested changes, and thanks to this addition, they have become much more capable than their predecessors.

As Kent Beck put it in our conversation:

It’s time to experiment! If there is one takeaway, it would be to try out tools like Claude Code/OpenAI Codex/Amp/Gemini CLI/Amazon Q CLI (with AWS CLI integration), editors like Cursor/Windsurf/VS Code with Copilot, other tools like Cline, Aider, Zed, and indeed anything that looks interesting. We’re in for exciting times, as a new category of tools is built that will be as commonplace in a few years as using a visual IDE, or using Git for source control, is today.
